Machine learning optimization: the formulations

In many deep learning applications, both N, the sample size, and D, the dimension of the examples and of the functions considered, are very large (e.g. D = 1024 × 1024 for the pixels of an image). We now write out the mathematical formulations of several optimization problems.

For linear regression, least-squares regression suggests:
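In standard notation, the least-squares problem is usually written as below. The symbols are assumptions, not taken from the source: (x_i, y_i), i = 1, …, N, are the training pairs with x_i ∈ ℝ^D, and w ∈ ℝ^D is the weight vector. The 1/N normalization is one common convention among several.

```latex
\min_{w \in \mathbb{R}^D} \; \frac{1}{N} \sum_{i=1}^{N} \bigl( y_i - w^\top x_i \bigr)^2
```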

The lasso (least absolute shrinkage and selection operator) suggests (c > 0):

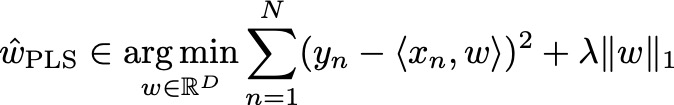
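Written out as text, the constrained form of the lasso is commonly stated as follows. The notation is assumed here: training pairs (x_i, y_i), i = 1, …, N, weights w ∈ ℝ^D, and c the bound from the text.

```latex
\min_{w \in \mathbb{R}^D} \; \frac{1}{N} \sum_{i=1}^{N} \bigl( y_i - w^\top x_i \bigr)^2
\quad \text{subject to} \quad \|w\|_1 \le c
```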

The ℓ₁-penalized least-squares estimator suggests (λ > 0):
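A usual way to write the penalized form moves the ℓ₁ bound into the objective with weight λ. The notation is assumed as before: training pairs (x_i, y_i), i = 1, …, N, and weights w ∈ ℝ^D.

```latex
\min_{w \in \mathbb{R}^D} \; \frac{1}{N} \sum_{i=1}^{N} \bigl( y_i - w^\top x_i \bigr)^2 + \lambda \|w\|_1
```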

The examples above are ℝ^D → ℝ problems. For binary regression, ℝ^D → {−1, +1}, logistic regression suggests:
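With labels y_i ∈ {−1, +1}, the logistic regression objective is typically written as the average logistic loss. The symbols are assumed: x_i ∈ ℝ^D are the examples and w ∈ ℝ^D the weights.

```latex
\min_{w \in \mathbb{R}^D} \; \frac{1}{N} \sum_{i=1}^{N} \log\bigl( 1 + \exp(-y_i \, w^\top x_i) \bigr)
```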

Whereas the support vector machine suggests:
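One common (soft-margin, linear) form of the support vector machine objective replaces the logistic loss with the hinge loss and adds an ℓ₂ penalty. This is a sketch under assumed notation: labels y_i ∈ {−1, +1}, weights w ∈ ℝ^D, and a regularization weight λ > 0 that the source does not name. Other variants (with a bias term, or stated as a constrained problem) are equally standard.

```latex
\min_{w \in \mathbb{R}^D} \; \frac{1}{N} \sum_{i=1}^{N} \max\bigl( 0, \; 1 - y_i \, w^\top x_i \bigr) + \frac{\lambda}{2} \|w\|_2^2
```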

Why focus on convex, online, gradient descent?

Convexity

Convexity is very useful: it gives theoretical tractability; it is very prevalent in applications; and theories for non-convex problems are scarce, ad hoc, and built on convex theory.

The scalability concern: online+gradient

The optimization formulations above are to be solved approximately via iterative algorithms, whose time complexity should have low dependence on N and D.

Compare online gradient descent, gradient descent, and Newton’s method.

In terms of scalability, we find online gradient descent > gradient descent >> Newton's method.

(Nevertheless, this is a superficial comparison.)
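To make the comparison concrete, here is a minimal sketch of one update of each method on a least-squares objective. For this objective, an online step touches one example and costs O(D), a full gradient step costs O(ND), and a Newton step costs O(ND²) to form the Hessian plus O(D³) to solve the linear system. The function names, the synthetic data, and the step sizes are all illustrative assumptions, not taken from the source.

```python
import numpy as np


def loss(w, X, y):
    """Least-squares loss: (1 / 2N) * ||Xw - y||^2."""
    r = X @ w - y
    return 0.5 * np.mean(r ** 2)


def online_gradient_step(w, x_i, y_i, lr):
    """One online step using a single example: O(D) work."""
    return w - lr * (x_i @ w - y_i) * x_i


def gradient_step(w, X, y, lr):
    """One full-batch gradient step over all N examples: O(N D) work."""
    grad = X.T @ (X @ w - y) / len(y)
    return w - lr * grad


def newton_step(w, X, y):
    """One Newton step: O(N D^2) to build the Hessian, O(D^3) to solve."""
    n = len(y)
    grad = X.T @ (X @ w - y) / n
    hessian = X.T @ X / n
    return w - np.linalg.solve(hessian, grad)


def run_demo(n=500, d=5, seed=0):
    """Run each method on synthetic data and return the final losses."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, d))
    w_true = rng.normal(size=d)
    y = X @ w_true + 0.01 * rng.normal(size=n)

    w0 = np.zeros(d)

    # Online gradient descent: a single pass, one example per update.
    w_online = w0.copy()
    for i in rng.permutation(n):
        w_online = online_gradient_step(w_online, X[i], y[i], lr=0.01)

    # Gradient descent: 100 full passes over the data.
    w_gd = w0.copy()
    for _ in range(100):
        w_gd = gradient_step(w_gd, X, y, lr=0.1)

    # Newton's method: for least squares with an invertible Hessian,
    # one step from any starting point reaches the minimizer.
    w_newton = newton_step(w0, X, y)

    return {
        "initial": loss(w0, X, y),
        "online": loss(w_online, X, y),
        "gradient": loss(w_gd, X, y),
        "newton": loss(w_newton, X, y),
    }
```

Calling `run_demo()` returns a dictionary of final losses. Newton's method reaches the lowest loss in the fewest iterations, but each of its iterations is by far the most expensive as D grows, which is one reason the ranking in the text is only a first approximation.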